What is CXL?

Compute Express Link (CXL) is an open industry standard that provides high-bandwidth, low-latency connections between host processors and devices such as accelerators, memory buffers, and smart I/O devices.

The Role of CXL in Enhancing Performance

As cloud computing becomes ubiquitous, data centers face an ever-increasing demand for computing capacity and faster processing speeds. To meet these demands, integrating accelerators such as GPUs, FPGAs, and specialized AI processors is essential. These accelerators are typically connected via the PCIe (Peripheral Component Interconnect Express) interface.

However, to optimize the performance of these accelerators within a heterogeneous computing architecture, a more advanced interconnect standard is needed. This is where Compute Express Link (CXL) comes into play.

CXL's coherency and memory semantics are crucial for processing data in emerging applications that require a mix of scalar, vector, matrix, and spatial architectures, which are deployed across CPUs, GPUs, FPGAs, smart NICs, and other accelerators.

How CXL Builds on PCIe: A Deep Dive into Protocol Layers

CXL enhances performance by enabling coherency and memory semantics on top of PCI Express (PCIe) based I/O semantics, optimizing performance for evolving usage models.

Physical Layer:

Shared Infrastructure: CXL uses the same physical and electrical interface as PCIe. This means that CXL devices can be connected using the same connectors, cables, and physical infrastructure as PCIe devices.

Compatibility: By using the PCIe physical layer, CXL ensures compatibility with existing PCIe hardware, making it easier to integrate CXL devices into current systems without needing new physical interfaces.
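Because CXL shares the PCIe physical layer, the raw link bandwidth follows the usual PCIe arithmetic. The sketch below works this out for one common case. It assumes a x16 link at 32 GT/s with 128b/130b encoding (the PCIe 5.0 signaling that CXL 1.1 and 2.0 run on) and ignores protocol and flit overhead, so treat the result as an upper bound rather than a measured figure. The function name is ours, chosen for illustration.

```python
def pcie_link_bandwidth_gb_per_s(gt_per_s: float, lanes: int,
                                 encoding: float = 128 / 130) -> float:
    """Approximate one-direction link bandwidth in GB/s.

    gt_per_s : transfer rate per lane in GT/s (one bit per transfer per lane)
    lanes    : link width (x1, x4, x8, x16, ...)
    encoding : line-encoding efficiency (128b/130b assumed here)
    """
    raw_gbit_per_s = gt_per_s * lanes          # raw bits on the wire
    return raw_gbit_per_s * encoding / 8       # remove encoding overhead, bits -> bytes


X16_AT_32GT = pcie_link_bandwidth_gb_per_s(32.0, 16)
print(f"x16 at 32 GT/s: {X16_AT_32GT:.1f} GB/s per direction")
```

Under these assumptions a x16 link comes out at roughly 63 GB/s in each direction.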

Protocol Layers:

CXL.io

- CXL.io is based on the PCIe protocol and is responsible for standard I/O operations. It handles configuration, link initialisation and management, device discovery and enumeration, interrupts, DMA (Direct Memory Access), and register I/O access using non-coherent loads/stores.

CXL.cache

- CXL.cache lets a device coherently access and cache host memory. Hardware keeps the device's cache and the CPU's caches consistent, so a change made on one side is visible to the other without software-managed copies.

CXL.mem

- CXL.mem allows the host CPU to coherently access device-attached memory with load/store commands.
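One way to keep the three protocols apart is to ask which kind of operation each one carries. The lookup table below is a minimal sketch of that division of labour as described above. The operation labels are hypothetical names we made up for illustration; they are not identifiers from the CXL specification or any driver.

```python
from enum import Enum


class Protocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# Hypothetical operation labels mapped to the protocol that carries them.
OPERATION_PROTOCOL = {
    "configuration": Protocol.IO,
    "device_enumeration": Protocol.IO,
    "interrupt": Protocol.IO,
    "dma": Protocol.IO,
    "device_caches_host_memory": Protocol.CACHE,
    "host_load_store_to_device_memory": Protocol.MEM,
}


def protocol_for(operation: str) -> Protocol:
    """Return the protocol that would carry the given (hypothetical) operation."""
    return OPERATION_PROTOCOL[operation]


for op in OPERATION_PROTOCOL:
    print(f"{op:35s} -> {protocol_for(op).value}")
```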

Types of CXL Devices

CXL devices are categorized into three types, each serving different purposes and offering unique advantages:

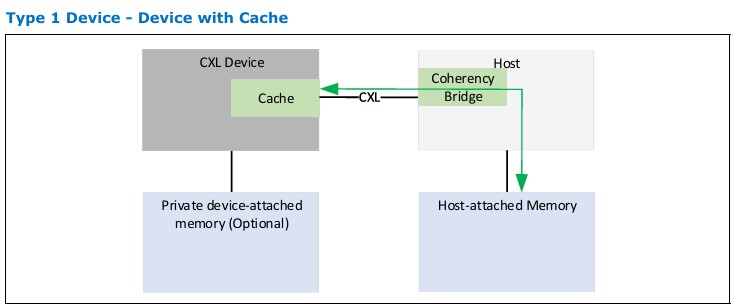
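The three types differ only in whether the device has a coherent cache and whether it exposes host-managed device memory, and those two properties decide which protocols the device uses on top of CXL.io. A small sketch of that relationship follows; the class and field names are our own, picked for readability.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CxlDeviceType:
    name: str
    coherent_cache: bool        # device caches host memory coherently
    host_managed_memory: bool   # device exposes host-managed device memory (HDM)

    def protocols(self) -> list[str]:
        """CXL.io is always present; the other two depend on the capabilities."""
        protos = ["CXL.io"]
        if self.coherent_cache:
            protos.append("CXL.cache")
        if self.host_managed_memory:
            protos.append("CXL.mem")
        return protos


DEVICE_TYPES = [
    CxlDeviceType("Type 1", coherent_cache=True, host_managed_memory=False),
    CxlDeviceType("Type 2", coherent_cache=True, host_managed_memory=True),
    CxlDeviceType("Type 3", coherent_cache=False, host_managed_memory=True),
]

for dev in DEVICE_TYPES:
    print(dev.name, "->", ", ".join(dev.protocols()))
```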

Type 1 CXL Device

These devices implement a fully coherent cache but do not have host-managed device memory.

- Applications: Typically used for accelerators that rely on coherent access to the host CPU’s memory.

- Advantages Over PCIe:

  - Coherent Memory Access: Ensures that the data in the device’s cache is consistent with the host CPU’s memory, reducing latency and improving performance for data-intensive tasks.

  - Efficient Data Transfer: Supports coherent data transfers, which are more efficient than the traditional PCIe data transfer method.

Example Scenario

Smart NIC Without Coherent Memory Access (PCIe):

- The CPU processes incoming network packets and stores any relevant data or results in its memory (RAM).

- The Smart NIC needs to access this data to offload network processing tasks (e.g., encryption, firewall checks, or packet filtering).

- Data Copying: The data must be copied from the CPU’s memory to the Smart NIC’s memory via the PCIe interface.

- Latency: This copying process introduces latency because data must travel between the CPU and the Smart NIC over PCIe, which may result in delays, especially when processing large volumes of network traffic in real-time.

- Processing Speed: This data transfer and the subsequent delay can slow down the Smart NIC’s ability to offload network tasks, reducing overall system efficiency.

Smart NIC With Coherent Memory Access (CXL):

- The CPU processes the incoming network packets and stores the relevant data in its memory (RAM).

- The Smart NIC, connected via CXL, can directly access the CPU’s memory without the need for explicit data copying.

- Direct Memory Access: The Smart NIC can read and write to the CPU’s memory as if it were its own. This is possible because CXL enables coherent memory, allowing both the CPU and the Smart NIC to access the same memory pool with shared visibility.

- Cache Coherency: Any changes made by the Smart NIC to the CPU’s memory are immediately visible to the CPU and vice versa. This means that the CPU and Smart NIC always have the same up-to-date view of the data, reducing the need for synchronization and further memory copying.

- Reduced Latency: By eliminating the need for copying data over PCIe and allowing direct access to memory, latency is significantly reduced, leading to faster processing of network tasks in real-time.
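The difference between the two scenarios can be illustrated with a software analogy. In the sketch below, the "PCIe" path copies a buffer to a device-local buffer and back, while the "CXL" path works on the same bytes through a shared view. This is only an analogy: real coherency is handled by hardware, and the XOR step is a stand-in for whatever work the Smart NIC offloads. All names here are hypothetical.

```python
def xor_bytes(buf, key: int) -> None:
    """Stand-in for offloaded work (for example, encryption); modifies buf in place."""
    for i in range(len(buf)):
        buf[i] ^= key


def offload_with_copies(host_ram: bytearray, key: int) -> int:
    """Copy-based model: host -> device buffer, process, device -> host."""
    device_buf = bytearray(host_ram)    # copy into device-local memory
    xor_bytes(device_buf, key)
    host_ram[:] = device_buf            # copy the result back
    return 2 * len(device_buf)          # bytes moved across the link


def offload_shared(host_ram: bytearray, key: int) -> int:
    """Shared-access model: the device works directly on the host's bytes."""
    view = memoryview(host_ram)         # no copy, same underlying memory
    xor_bytes(view, key)
    view.release()
    return 0                            # nothing was copied


packets_a = bytearray(b"incoming-packet-data")
packets_b = bytearray(b"incoming-packet-data")
print("bytes copied (copy model):  ", offload_with_copies(packets_a, 0x5A))
print("bytes copied (shared model):", offload_shared(packets_b, 0x5A))
print("same result:", packets_a == packets_b)
```

Both paths produce the same data; the shared path simply gets there without the two bulk copies.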

Type 2 CXL Devices

These devices have coherent cache and host-managed device memory (HDM). HDM refers to coherent system address-mapped device-attached memory.

Note: There is an important distinction between HDM and traditional I/O and PCIe Private Device Memory (PDM).

An example of a device with PDM is a GPGPU with attached GDDR. Such devices have traditionally treated device-attached memory as private. This means that the memory is not accessible to the Host and is not coherent with the remainder of the system. It is managed entirely by the device hardware and driver and is used primarily as intermediate storage for the device with large data sets. The obvious disadvantage of this model is that it involves high-bandwidth copies back and forth between Host memory and device-attached memory as operands are brought in and results are written back.

- Applications: Suitable for devices with high-bandwidth memory, such as GPUs and AI accelerators.

- Advantages Over PCIe:

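  - Flexible Memory Management: Supports both host bias and device bias modes for device-attached memory. In host bias, device accesses are resolved through the host's coherency logic; in device bias, the device accesses its own memory directly without consulting the host. This provides flexibility in how memory is managed and accessed.

  - High-Bandwidth Data Transfers: Uses CXL.mem for host access to device-attached memory, making it well suited to applications that need to move large amounts of data quickly.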

Example Scenario

Imagine a data center running a complex AI workload that involves both real-time data processing and large-scale data analytics.

Real-Time Data Processing:

- The CPU processes incoming data and stores it in its memory.

- The GPU, connected via CXL Type 2, needs to access this data to perform real-time inference.

- Using the CXL.cache protocol, the GPU can directly access the CPU’s memory, ensuring that any updates made by the CPU are immediately visible to the GPU.

- This coherent memory access reduces latency and improves the performance of real-time inference tasks.

Large-Scale Data Analytics:

- The CPU offloads a large dataset to the Host Managed Device Memory (HDM) of a CXL Type 2 device.

- The CPU then puts the device into device bias mode, allowing the device to directly access this memory without needing to consult the CPU. We will discuss device bias in detail in future blog posts.

- The device then executes against this memory, and its performance comes from the massive bandwidth between the accelerator and its device-attached memory.
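To make the effect of device bias concrete, here is a toy model of a bias table. It assumes bias is tracked per page and simply counts how often a device access has to go through the host. The class names and the page granularity are our assumptions for illustration, and the model leaves out everything a real bias transition involves.

```python
from enum import Enum


class Bias(Enum):
    HOST = "host"
    DEVICE = "device"


class BiasTable:
    """Toy per-page bias tracking for device-attached memory."""

    def __init__(self, num_pages: int) -> None:
        self.bias = [Bias.HOST] * num_pages   # assume pages start in host bias
        self.host_lookups = 0                 # device accesses routed via the host

    def set_bias(self, page: int, bias: Bias) -> None:
        self.bias[page] = bias

    def device_access(self, page: int) -> str:
        """Model a device access to one page of its own attached memory."""
        if self.bias[page] is Bias.HOST:
            self.host_lookups += 1            # must consult the host first
            return "via host"
        return "direct"                       # device bias: no host involvement


table = BiasTable(num_pages=4)
table.set_bias(0, Bias.DEVICE)
table.set_bias(1, Bias.DEVICE)
for page in range(4):
    print(f"page {page}: {table.device_access(page)}")
print("accesses that involved the host:", table.host_lookups)
```

In this model only the pages left in host bias cost a trip to the host, which is the intuition behind moving a large working set into device bias before the accelerator runs.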

Type 3 CXL Devices

These devices only have host-managed device memory and do not implement a coherent cache.

- Applications: Commonly used as memory expanders for the host CPU.

- Advantages Over PCIe:

  - Memory Expansion: Enables the host CPU to access additional memory beyond its native capacity, which is particularly useful for memory-intensive applications.

  - Reduced Latency: Provides a direct and efficient path for memory access, reducing latency compared to traditional PCIe-based memory expansion solutions.

Example Scenario:

Memory Sharing in a Multi-Host Environment

A data centre is running a complex cloud computing environment where multiple virtual machines (VMs) are hosted across different physical servers. These VMs require access to a large, shared memory pool to efficiently handle data-intensive applications such as big data analytics and AI workloads.

Using CXL Type 3 Device:

- Memory Expansion and Sharing: The data centre switches to using CXL Type 3 devices for memory expansion and sharing.

- Advantages:

  - Shared Memory Pool: CXL Type 3 devices facilitate the creation of a shared memory pool accessible by multiple hosts. This capability allows virtual machines (VMs) on different servers to efficiently share memory resources. The implementation of this shared memory pool can be achieved using a CXL switch, which we will explore in more detail in future blog posts.

  - Low Latency: CXL provides low-latency access to the shared memory pool, significantly improving the performance of distributed applications.

  - High Bandwidth: Because CXL runs over the PCIe physical layer, it offers link bandwidth comparable to a PCIe link of the same generation and width, while letting hosts reach pooled memory with ordinary load/store instructions. This allows faster data transfers and better handling of data-intensive workloads.

  - Memory Coherency: Where memory is genuinely shared between hosts (a capability added in CXL 3.0), hardware coherency gives all servers a consistent view of the shared memory. This reduces the overhead associated with maintaining data consistency and improves overall efficiency.
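The pooling idea in this scenario can be sketched as simple capacity bookkeeping. The toy class below hands out capacity from one pool to several hosts and refuses requests that would exceed it. It is only a model of the accounting: the names are hypothetical, and it does not represent a CXL switch, its management software, or coherency.

```python
class MemoryPool:
    """Toy capacity accounting for a memory pool shared by several hosts."""

    def __init__(self, capacity_gib: int) -> None:
        self.capacity_gib = capacity_gib
        self.allocations: dict[str, int] = {}   # host name -> GiB assigned

    def free_gib(self) -> int:
        return self.capacity_gib - sum(self.allocations.values())

    def allocate(self, host: str, size_gib: int) -> None:
        """Assign capacity to a host, or raise if the pool cannot cover it."""
        if size_gib > self.free_gib():
            raise MemoryError(f"pool has only {self.free_gib()} GiB free")
        self.allocations[host] = self.allocations.get(host, 0) + size_gib

    def release(self, host: str) -> int:
        """Return all of a host's capacity to the pool."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gib=512)
pool.allocate("server-a", 256)
pool.allocate("server-b", 128)
print("free after two allocations:", pool.free_gib(), "GiB")
pool.release("server-a")
print("free after server-a releases:", pool.free_gib(), "GiB")
```

The point of the model is that capacity a server no longer needs goes back to the pool and can be assigned to another server, instead of sitting idle inside one machine.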

Conclusion

In this blog, we introduced Compute Express Link (CXL) and discussed how it leverages the PCIe physical layer to enhance data center performance. We explored the different types of CXL devices and their advantages over traditional PCIe devices, particularly in terms of memory coherency, bandwidth, and latency.

This blog serves as an introduction to CXL. In future posts, we will delve deeper into the low-level details of the CXL protocol and explore how it operates within the example scenarios mentioned above. Stay tuned for more insights.